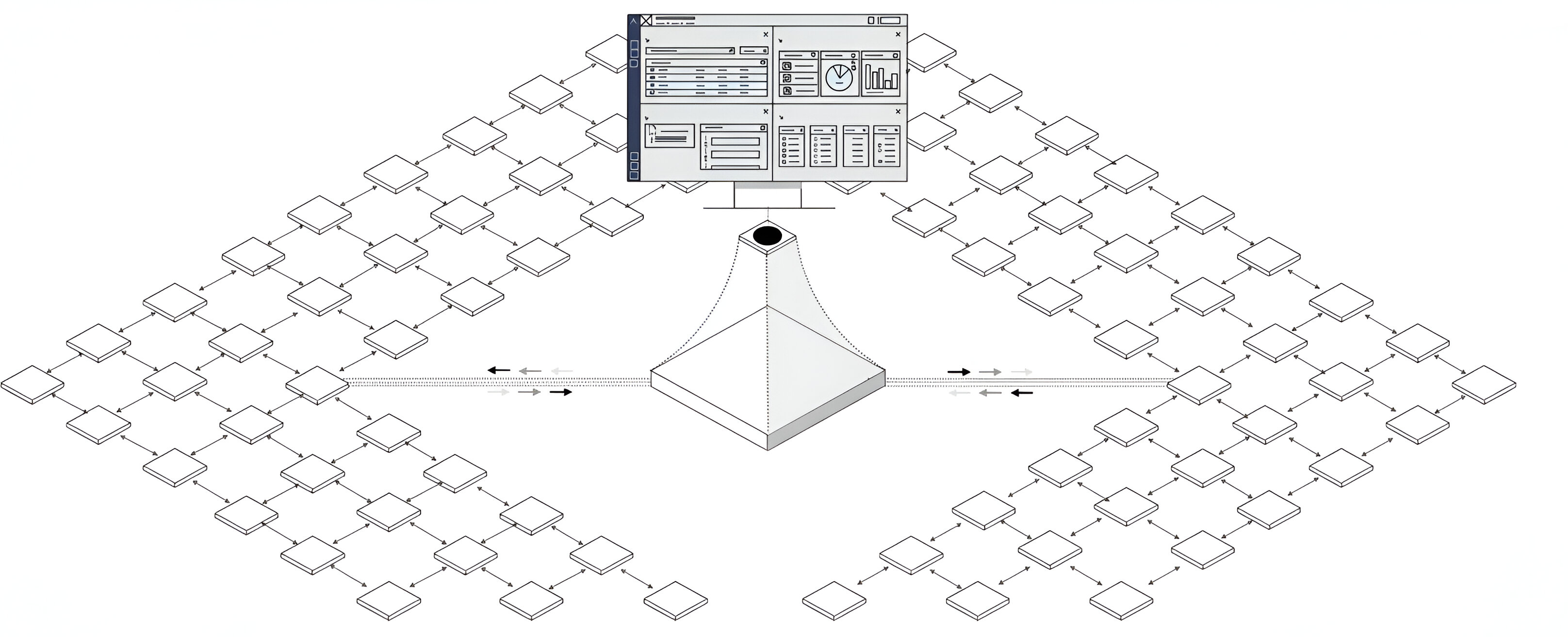

Data from the entire Internet, distilled by LLMs

Our ingestion and LLM orchestration flow

We connect distributed sources, normalize the data, and elevate it into a knowledge graph ready for analysis and decision-making.
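To make the last step concrete: once records are normalized, each one can be split into (subject, predicate, object) triples and merged per entity. The Python sketch below assumes a flat record carrying a canonical `entity_id` field (a hypothetical name) and keeps the graph in an in-memory dict. It illustrates the idea only, not how this pipeline actually stores its graph.

```python
from collections import defaultdict

# Hypothetical normalized record: a canonical entity ID plus attribute/value pairs.
Record = dict[str, str]


def to_triples(record: Record, subject_key: str = "entity_id") -> list[tuple[str, str, str]]:
    """Turn one flat record into (subject, predicate, object) triples."""
    subject = record[subject_key]
    return [
        (subject, predicate, value)
        for predicate, value in sorted(record.items())
        if predicate != subject_key  # the ID itself is the subject, not an edge
    ]


def build_graph(records: list[Record]) -> dict[str, set[tuple[str, str]]]:
    """Merge triples from many sources into an adjacency map keyed by subject."""
    graph: dict[str, set[tuple[str, str]]] = defaultdict(set)
    for record in records:
        for subject, predicate, obj in to_triples(record):
            graph[subject].add((predicate, obj))
    return dict(graph)
```

Because each entity's edges are a set, the same fact arriving from two sources collapses into one edge; provenance would have to be stored alongside the edge to keep track of where it came from.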

Where we source and orchestrate data

We ingest open sources, media, social platforms, and your internal systems. Models clean and normalize the data and link entities so every signal arrives contextualized and decision-ready.
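Cleaning, normalization, and entity linking are attributed to models here. As a deterministic stand-in, for illustration only, this Python sketch normalizes raw mentions and resolves them against an alias table. `ALIASES` and the `org:acme` ID are made up, and a rule-based lookup is a plainly different technique from the model-driven step described above.

```python
import re
import unicodedata
from typing import Optional

# Hypothetical alias table: normalized surface form -> canonical entity ID.
ALIASES = {
    "acme": "org:acme",
    "acme corp": "org:acme",
    "acme corporation": "org:acme",
}


def normalize_name(raw: str) -> str:
    """Canonicalize a mention: NFKC-fold, casefold, turn punctuation into spaces, collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw).casefold()
    text = re.sub(r"[^\w\s]", " ", text)
    return " ".join(text.split())


def link_entity(raw: str, aliases: dict[str, str] = ALIASES) -> Optional[str]:
    """Map a raw mention to its canonical ID, or None if it is not in the alias table."""
    return aliases.get(normalize_name(raw))
```

`link_entity("ACME, Corp.")` and `link_entity("Acme Corporation")` both resolve to `"org:acme"`; unknown names return `None`, which is the point where a model-based resolver could take over.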

Open Web

Public reports, benchmarks, and docs that we clean and normalize so they plug straight into your stack.

- Sources: websites, docs, PDFs, and RSS feeds, collected with robots.txt-compliant scraping

- Transforms: HTML → JSON → Parquet with entity/date/geo tags

- Cadence: near-real-time refreshes for prices, SKUs, and specs
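Two of the steps listed above can be sketched with the Python standard library alone: checking a URL against robots.txt (one reading of "robots-safe"), and turning HTML into a JSON record with date tags. `USER_AGENT` and the record fields are hypothetical. Entity and geo tagging and the Parquet stage are left out, since they depend on models and on a third-party library such as pyarrow.

```python
import json
import re
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-ingest-bot"  # hypothetical crawler name
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")  # naive date tagger: ISO dates only


def allowed(robots_txt: str, url: str, agent: str = USER_AGENT) -> bool:
    """Check a URL against an already-downloaded robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


class _TextExtractor(HTMLParser):
    """Collect the <title> and visible text, skipping <script>/<style> content."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.chunks: list[str] = []
        self._skip = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1
        elif tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._skip:
            return
        if self._in_title:
            self.title += data
        else:
            self.chunks.append(data)


def html_to_record(url: str, html: str) -> str:
    """Convert an HTML page into a JSON record with collapsed text and date tags."""
    extractor = _TextExtractor()
    extractor.feed(html)
    extractor.close()
    text = " ".join(" ".join(extractor.chunks).split())
    return json.dumps({
        "url": url,
        "title": extractor.title.strip(),
        "text": text,
        "dates": sorted(set(ISO_DATE.findall(text))),
    })
```

The robots check takes an already-downloaded robots.txt body so it can be tested offline; a live crawler would also fetch and cache that file per host before calling it.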

Generated sample

Source map & freshness

Sites & PDFs · RSS feeds · Robots-safe · HTML → JSON · Entity tagging

Freshness: ≤ 15 min

Dedup + provenance on every document.

Regions & languages

62 countries · 18 languages

Quality

Noise filtered: 87%
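The "Dedup + provenance" and freshness figures in the sample above suggest a simple mechanism: hash normalized content, keep one copy per hash, and record every source that carried it. Here is a minimal Python sketch under that assumption. `Provenance`, `content_key`, and the 15-minute window (taken from the generated sample, so illustrative only) are hypothetical, and exact hashing will not catch near-duplicates, which would need a technique such as MinHash.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Illustrative window borrowed from the sample card's "≤ 15 min" figure.
FRESHNESS_WINDOW = timedelta(minutes=15)


@dataclass(frozen=True)
class Provenance:
    """Hypothetical record of where and when a document was fetched."""
    source_url: str
    fetched_at: datetime  # timezone-aware UTC timestamp


def content_key(text: str) -> str:
    """Hash whitespace- and case-normalized text so trivial variants collide."""
    normalized = " ".join(text.split()).casefold()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def dedup(docs: list[tuple[str, Provenance]]) -> dict[str, dict]:
    """Keep the first copy per content key, but remember every source that carried it."""
    merged: dict[str, dict] = {}
    for text, prov in docs:
        entry = merged.setdefault(content_key(text), {"text": text, "sources": []})
        entry["sources"].append(prov)
    return merged


def is_fresh(prov: Provenance, now: datetime, window: timedelta = FRESHNESS_WINDOW) -> bool:
    """True if the document was fetched no more than `window` before `now`."""
    return now - prov.fetched_at <= window
```

Keeping the list of sources rather than discarding duplicates is what lets every surviving document carry its provenance.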

From Data to Action

Develop bold strategies, seize opportunities, and lead with clarity and confidence.